Introduction

The rapid evolution of AI and Machine Learning (ML) has given rise to innovative technologies that are reshaping industries as diverse as Healthcare and FinTech. One such emerging paradigm is Retrieval-Augmented Generation (RAG), a methodology that combines the capabilities of Large Language Models (LLMs) with external data sources to deliver more accurate, context-rich responses. As a solutions architect and cloud engineer with over 17 years of experience, I have witnessed firsthand how this approach can revolutionize AI applications, drive business value, and open up new frontiers of automation and intelligence.

In this blog, we will explore:

- The core principles behind RAG

- How agentic network creation amplifies the capabilities of RAG

- Key use cases in Healthcare and FinTech

- A high-level overview of architecture and implementation strategies

By the end, you will have a comprehensive understanding of how this approach can solve real-world challenges, especially in regulated and data-intensive environments. Let’s delve in.

Understanding Retrieval-Augmented Generation

The Basics

Retrieval-augmented generation is an approach that uses Large Language Models, such as GPT-based systems, to generate text, with a crucial twist: instead of relying solely on the model’s internal parameters, it retrieves information from external sources. This lets the AI ground its outputs in up-to-date, context-specific data, making the generated responses more reliable and relevant.

At its core, RAG operates in two stages:

- Retrieval: A retriever queries an external data source, such as a search index, vector database, or knowledge base, to find the most relevant documents or data points.

- Generation: The LLM then uses that retrieved information to craft a contextually accurate, human-like answer.

This combination mitigates some of the classic pitfalls of LLMs, namely their tendency to hallucinate or provide outdated information. In effect, it “refreshes” the AI’s knowledge on the fly, tailoring responses to each query’s unique demands.
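The two stages described above can be sketched in a few lines of Python. This is a minimal illustration, not a production design: the tiny in-memory knowledge base is made up, keyword overlap stands in for a real retriever, and `generate` is a hypothetical placeholder for whatever LLM client you use (the usage line passes a fake one that simply echoes the prompt).

```python
from typing import Callable, List

def keyword_retrieve(query: str, knowledge_base: List[str], k: int = 2) -> List[str]:
    """Stage 1 (retrieval): return up to k documents sharing the most words with the query.

    Keyword overlap is only a stand-in; real retrievers typically use
    vector similarity search or a ranked search index.
    """
    query_terms = set(query.lower().split())
    scored = [(len(query_terms & set(doc.lower().split())), doc) for doc in knowledge_base]
    # Drop documents with no shared terms, then sort best matches first.
    scored = [item for item in scored if item[0] > 0]
    scored.sort(key=lambda item: item[0], reverse=True)
    return [doc for _, doc in scored[:k]]

def rag_answer(query: str, knowledge_base: List[str],
               generate: Callable[[str], str], k: int = 2) -> str:
    """Stage 2 (generation): hand the retrieved context to the generator (an LLM in practice)."""
    context = keyword_retrieve(query, knowledge_base, k)
    prompt = "Context:\n" + "\n".join(context) + f"\n\nQuestion: {query}"
    return generate(prompt)

kb = ["the prime rate is set by banks",
      "aspirin can reduce fever",
      "loan rates depend on credit scores"]
print(rag_answer("what affects loan rates", kb, generate=lambda prompt: prompt, k=1))
```

Keeping `generate` as an injected callable makes the retrieval half testable on its own and lets you swap model providers without touching retrieval code.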

AI, ML, and LLM Synergy

RAG sits at the intersection of AI and ML. The retrieval component can employ various ML-driven ranking algorithms or vector similarity searches, while the generation component relies on advanced LLMs. This synergy results in:

- High Accuracy: By incorporating real-time data or domain-specific knowledge, RAG reduces factual inconsistencies.

- Scalability: Retrieval systems can be sharded or replicated across multiple nodes in a cloud environment, ensuring rapid responses even under heavy load.

- Customizability: Each domain—be it Healthcare or FinTech—can have its own dedicated knowledge base, giving the system deep specialization.

Agentic Network Creation: The Next Evolution

What is Agentic Network Creation?

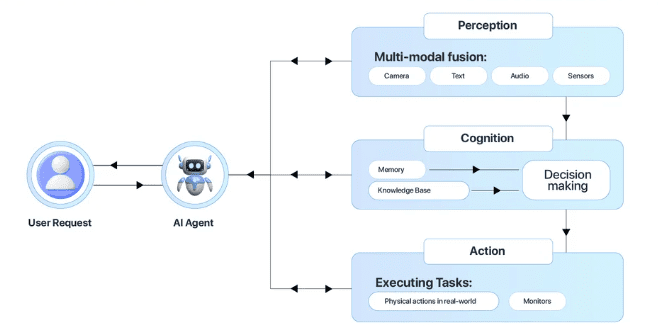

In the context of RAG, agentic network creation refers to designing AI agents that collaborate, share data, and make decisions in a semi-autonomous manner. These agents “talk” to each other, forming a network that can handle complex tasks end-to-end, ranging from retrieving patient data in a hospital setting to automating loan approvals in FinTech.

How It Enhances RAG

Rather than limiting RAG to a single LLM attached to a single database, agentic networks let multiple specialized models coordinate, each with its own retrieval pipeline:

- Healthcare Example: One agent could be responsible for retrieving past medical histories, another for analyzing pathology reports, and a third for generating a patient-friendly summary.

- FinTech Example: In loan processing, one agent retrieves a user’s credit report, another calculates risk scores, and a third crafts a personalized loan offer—all in real time.

The result is an orchestrated workflow that enhances this methodology with specialized intelligence, driving more accurate and context-rich outcomes.
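The FinTech loan example can be sketched as a sequential chain of agents that each read from and add to a shared state. Everything here is hypothetical: `CREDIT_BUREAU` is an in-memory stand-in for a real credit-report retrieval pipeline, and the risk formula, cutoff, and APR pricing are illustrative placeholders, not a lending policy.

```python
from typing import Any, Callable, Dict, List

State = Dict[str, Any]
Agent = Callable[[State], State]

# Hypothetical stand-in for a credit bureau; a real agent would call a retrieval pipeline.
CREDIT_BUREAU: Dict[str, Dict[str, float]] = {
    "u-100": {"credit_score": 780, "debt_to_income": 0.20},
    "u-200": {"credit_score": 590, "debt_to_income": 0.55},
}

def credit_agent(state: State) -> State:
    """Agent 1: retrieve the applicant's credit report."""
    return {**state, "report": CREDIT_BUREAU[state["user_id"]]}

def risk_agent(state: State) -> State:
    """Agent 2: illustrative risk score in [0, 1]; higher means riskier."""
    report = state["report"]
    # Map a 300-850 credit score onto 0 (best) .. 1 (worst).
    score_risk = (850 - report["credit_score"]) / 550
    risk = 0.6 * score_risk + 0.4 * report["debt_to_income"]
    return {**state, "risk": round(min(max(risk, 0.0), 1.0), 3)}

def offer_agent(state: State) -> State:
    """Agent 3: draft an offer, or decline above a hypothetical cutoff."""
    if state["risk"] > 0.5:
        offer = "declined: refer to a human underwriter"
    else:
        apr = 5.0 + 10.0 * state["risk"]  # illustrative pricing, not real policy
        offer = f"approved at {apr:.2f}% APR"
    return {**state, "offer": offer}

def run_pipeline(agents: List[Agent], state: State) -> State:
    """Pass the shared state through each agent in order."""
    for agent in agents:
        state = agent(state)
    return state

result = run_pipeline([credit_agent, risk_agent, offer_agent], {"user_id": "u-100"})
print(result["offer"])  # approved at 6.56% APR
```

Because each agent returns a new state rather than mutating a shared object, every intermediate result (report, risk, offer) stays inspectable, which helps when auditing automated decisions.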

RAG in Healthcare and FinTech

Healthcare: Precision and Personalization

Challenge: In Healthcare, practitioners must sift through enormous amounts of data—electronic health records, research journals, and diagnostic images—while staying compliant with regulations like HIPAA.

RAG Solution:

- Precision Diagnosis: A RAG system can retrieve the latest scientific findings based on a patient’s symptoms, helping doctors get evidence-backed recommendations.

- Patient Engagement: Personalized chatbots powered by RAG can offer instant answers to common patient questions, grounded in the individual’s medical history.

- Agentic Networks: Multiple agents can collaborate to fetch lab results, check medication histories, and propose treatment plans. This boosts the quality of care and clinical efficiency.

FinTech: Automating Workflows and Reducing Risk

Challenge: Financial transactions involve analyzing large datasets—historical trading data, fraud indicators, credit scores—while meeting stringent regulatory requirements.

RAG Solution:

- Risk Assessment: RAG can retrieve real-time market metrics, regulatory policies, and customer credit data to generate an accurate risk profile.

- Automated Support: Agentic networks can handle customer queries, policy reviews, and compliance checks simultaneously, reducing manual overhead.

- Fraud Detection: By dynamically retrieving data from transaction logs and user history, RAG-equipped systems can potentially spot anomalies faster than static, rule-based approaches.

High-Level RAG Architecture

Data Ingestion Layer

All relevant data—medical records, financial documents, scientific research—is aggregated into a scalable storage system. In a cloud environment, this might involve:

- Object Storage (e.g., Amazon S3) for unstructured files

- Relational Databases (e.g., MySQL) for structured data

- NoSQL Databases (e.g., MongoDB) for semi-structured data

Indexing and Embeddings

Next, the data is indexed for efficient retrieval. Modern RAG systems often use vector embeddings generated by ML models. These embeddings capture semantic relationships, enabling the system to find contextually similar documents or data points even if exact keyword matches are absent.
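At its core, embedding-based indexing is a similarity search over vectors. The sketch below is a brute-force, in-memory index using cosine similarity. It assumes embeddings have already been produced by some embedding model; the tiny hand-written 3-dimensional vectors are purely illustrative. Production systems typically use a dedicated vector database or an approximate nearest-neighbor library rather than a linear scan.

```python
import math
from typing import Dict, List, Sequence, Tuple

def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

class InMemoryVectorIndex:
    """Brute-force vector index; fine for demos, too slow for large corpora."""

    def __init__(self) -> None:
        self._vectors: Dict[str, List[float]] = {}

    def add(self, doc_id: str, embedding: Sequence[float]) -> None:
        self._vectors[doc_id] = list(embedding)

    def search(self, query: Sequence[float], k: int = 3) -> List[Tuple[str, float]]:
        """Return the k most similar (doc_id, score) pairs, best first."""
        scored = [(doc_id, cosine_similarity(query, vec))
                  for doc_id, vec in self._vectors.items()]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        return scored[:k]

index = InMemoryVectorIndex()
index.add("hypertension-guideline", [0.9, 0.1, 0.0])
index.add("blood-pressure-faq", [0.8, 0.3, 0.1])
index.add("loan-policy", [0.0, 0.2, 0.95])
query_vec = [0.85, 0.2, 0.05]  # pretend embedding of "treating high blood pressure"
print([doc_id for doc_id, _ in index.search(query_vec, k=2)])
# ['hypertension-guideline', 'blood-pressure-faq']
```

"High blood pressure" and "hypertension" share no keywords; with a good embedding model they still land close together in vector space, which is what the hand-picked vectors imitate here.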

Retrieval Pipeline

When a query is received—say, a user asks for the best treatment for a rare condition—the RAG system:

- Generates an embedding of the query.

- Searches for top-matching documents in the vector store.

- Ranks and filters these documents based on relevance and authority.
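The three retrieval steps can be sketched as a single function. This is a minimal illustration under stated assumptions: `embed` is a hypothetical callable wrapping your embedding model, the corpus is a plain list of dicts with hypothetical `embedding` and `authority` keys, and "authority" is modeled as a per-document weight (for example, a clinical guideline weighted above a forum post). The `min_score` cutoff and the multiplicative blending are illustrative choices, not a standard.

```python
import heapq
import math
from typing import Any, Callable, Dict, List, Sequence

def _cosine(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norms = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norms if norms else 0.0

def retrieve_ranked(query: str,
                    embed: Callable[[str], Sequence[float]],
                    corpus: List[Dict[str, Any]],
                    top_k: int = 10,
                    min_score: float = 0.3,
                    final_k: int = 3) -> List[Dict[str, Any]]:
    # Step 1: generate an embedding of the query.
    query_vec = embed(query)

    # Step 2: search for the top_k candidates by raw vector similarity.
    scored = [(_cosine(query_vec, doc["embedding"]), doc) for doc in corpus]
    candidates = heapq.nlargest(top_k, scored, key=lambda pair: pair[0])

    # Step 3: filter weak matches, then re-rank by relevance weighted by authority.
    ranked = [
        {**doc, "relevance": sim, "score": sim * doc.get("authority", 1.0)}
        for sim, doc in candidates
        if sim >= min_score
    ]
    ranked.sort(key=lambda d: d["score"], reverse=True)
    return ranked[:final_k]

corpus = [
    {"id": "clinical-guideline", "embedding": [0.9, 0.1], "authority": 1.0},
    {"id": "forum-post", "embedding": [1.0, 0.0], "authority": 0.5},
    {"id": "billing-faq", "embedding": [0.0, 1.0], "authority": 1.0},
]
results = retrieve_ranked("treatment for condition X", lambda text: [1.0, 0.0], corpus)
print([d["id"] for d in results])  # ['clinical-guideline', 'forum-post']
```

In the usage example the forum post is the closest raw match, but the authority weight pushes the guideline to the top, while the off-topic FAQ is filtered out by `min_score`.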

Generation Layer (LLM)

The LLM then reads the retrieved documents and crafts a response. Depending on the domain, it may also reference regulatory guidelines or external APIs for real-time data (e.g., current financial regulations).
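A common way to feed retrieved documents to the LLM is to pack them into a prompt with source labels and an instruction to answer only from that context. The sketch below assumes each retrieved document is a dict with hypothetical `id` and `text` keys, uses a rough character budget as a stand-in for a real token limit, and treats `llm` as a hypothetical callable wrapping whichever model API you use.

```python
from typing import Callable, Dict, List

SYSTEM_INSTRUCTIONS = (
    "Answer the question using only the numbered sources below. "
    "Cite sources like [1]. If the sources do not contain the answer, say so."
)

def build_prompt(question: str, docs: List[Dict[str, str]],
                 max_context_chars: int = 4000) -> str:
    """Pack retrieved documents (assumed best-first) into a grounded prompt."""
    blocks: List[str] = []
    used = 0
    for i, doc in enumerate(docs, start=1):
        block = f"[{i}] ({doc['id']}) {doc['text']}"
        if used + len(block) > max_context_chars:
            break  # stop before exceeding the budget; lower-ranked docs are dropped
        blocks.append(block)
        used += len(block)
    return (f"{SYSTEM_INSTRUCTIONS}\n\nSources:\n" + "\n".join(blocks)
            + f"\n\nQuestion: {question}\nAnswer:")

def generate_answer(question: str, docs: List[Dict[str, str]],
                    llm: Callable[[str], str]) -> str:
    """`llm` is a hypothetical callable wrapping your model client."""
    return llm(build_prompt(question, docs))

docs = [{"id": "reg-7", "text": "Fees must be disclosed."}]
print(build_prompt("Must fees be disclosed?", docs))
```

Numbered source labels make it possible to check afterwards which retrieved document each claim in the answer came from, which matters in regulated settings.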

Agentic Network Coordination

In more advanced setups, multiple agents each handle specialized queries or tasks. An orchestration layer routes the user’s request to the appropriate agents, merges their outputs, and ensures compliance rules are respected.
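An orchestration layer can be sketched as a registry that maps request types to the agents that handle them, merges their outputs, and applies a compliance check before anything is returned. All names here (the agents, the `patient_summary` request type, the roles, and the field-level redaction rule) are hypothetical; real compliance logic would depend on your regulatory requirements.

```python
from typing import Any, Callable, Dict, List, Set

AgentFn = Callable[[Dict[str, Any]], Dict[str, Any]]

class Orchestrator:
    """Routes a request to its registered agents, merges results, enforces rules."""

    def __init__(self, restricted_fields: Set[str], privileged_roles: Set[str]) -> None:
        self._routes: Dict[str, List[AgentFn]] = {}
        self._restricted = restricted_fields
        self._privileged = privileged_roles

    def register(self, request_type: str, agent: AgentFn) -> None:
        self._routes.setdefault(request_type, []).append(agent)

    def handle(self, request_type: str, payload: Dict[str, Any],
               caller_role: str) -> Dict[str, Any]:
        agents = self._routes.get(request_type)
        if not agents:
            raise ValueError(f"no agents registered for {request_type!r}")
        merged: Dict[str, Any] = {}
        for agent in agents:
            merged.update(agent(payload))  # later agents win on key collisions
        return self._enforce_compliance(merged, caller_role)

    def _enforce_compliance(self, result: Dict[str, Any],
                            caller_role: str) -> Dict[str, Any]:
        # Hypothetical rule: only privileged roles may see restricted fields.
        if caller_role in self._privileged:
            return result
        return {key: "[REDACTED]" if key in self._restricted else value
                for key, value in result.items()}

# Hypothetical agents; real ones would each run their own retrieval pipeline.
def lab_agent(payload: Dict[str, Any]) -> Dict[str, Any]:
    return {"lab_results": f"CBC within normal range for {payload['patient_id']}"}

def medication_agent(payload: Dict[str, Any]) -> Dict[str, Any]:
    return {"medications": ["metformin"]}

orchestrator = Orchestrator(restricted_fields={"lab_results"},
                            privileged_roles={"clinician"})
orchestrator.register("patient_summary", lab_agent)
orchestrator.register("patient_summary", medication_agent)
print(orchestrator.handle("patient_summary", {"patient_id": "p-1"}, caller_role="billing"))
```

Applying the compliance check once, at the single exit point of the orchestrator, means individual agents cannot accidentally bypass it.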

Security and Governance

Especially in Healthcare and FinTech, data governance and privacy are paramount. Encryption at rest and in transit, access controls, and audit trails form a robust security layer. Cloud engineers can implement these best practices using services such as AWS Key Management Service (KMS), Azure Key Vault, or Google Cloud Secret Manager.
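Of the controls listed above, audit trails are the easiest to illustrate without a cloud account. The sketch below is a hypothetical, standard-library-only, append-only log in which each entry stores a SHA-256 hash of the previous entry, so editing or deleting any non-final record breaks the chain and is detected on verification. It is illustrative only: someone able to rewrite the whole log could recompute every hash, so the latest hash would need to be anchored somewhere tamper-resistant, and in production you would typically rely on managed audit logging and keep keys in a KMS or key vault rather than in application code.

```python
import hashlib
import json
import time
from typing import Any, Dict, List

class HashChainedAuditLog:
    """Append-only log where each entry commits to the hash of the previous one."""

    GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

    def __init__(self) -> None:
        self.entries: List[Dict[str, Any]] = []

    @staticmethod
    def _digest(body: Dict[str, Any]) -> str:
        # Canonical JSON (sorted keys) so identical content always hashes identically.
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()

    def record(self, actor: str, action: str, resource: str) -> Dict[str, Any]:
        prev_hash = self.entries[-1]["hash"] if self.entries else self.GENESIS
        body = {"ts": time.time(), "actor": actor, "action": action,
                "resource": resource, "prev_hash": prev_hash}
        entry = {**body, "hash": self._digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash; editing or deleting a non-final entry breaks the chain."""
        prev_hash = self.GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev_hash or self._digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True

log = HashChainedAuditLog()
log.record("dr_lee", "read", "patient/42/labs")
log.record("rag-retriever", "retrieve", "patient/42/history")
print(log.verify())  # True
```

Logging the retriever's own data access (the second entry) alongside human access is useful in RAG systems, since the model may touch records no user explicitly opened.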

Implementation Strategies

- Pilot Projects: Start small with a proof of concept (PoC). For example, implement RAG in a single department within a hospital or for a specific product line in FinTech. Measure metrics like relevance, latency, and user satisfaction before scaling up.

- Cloud-Native Microservices: Break down RAG functionality into microservices—retrieval service, embedding service, generation service, etc. This approach simplifies scaling and allows your cloud infrastructure to handle spikes in traffic without service disruption.

- Observability and Monitoring: Deploy comprehensive logging, monitoring, and tracing tools. This ensures that if an issue arises—such as slow query times or data retrieval errors—you can quickly pinpoint the bottleneck.

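- Continuous Model Updates: The retrieval and generation components both rely on ML models that are periodically retrained or replaced. Automate model deployment via CI/CD pipelines so your system stays up-to-date with the latest embeddings and LLM weights. Note that changing the embedding model usually means re-embedding the indexed corpus, because vectors produced by different models are generally not comparable.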
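For a pilot, relevance and latency can be tracked with a small evaluation harness. The sketch below assumes a hand-labeled set of queries, each mapped to the IDs of documents a domain expert judged relevant (at least one per query), and a `retrieve` callable returning ranked document IDs; both are hypothetical here. It computes recall@k, mean reciprocal rank (MRR), and a nearest-rank p95 latency. User satisfaction still has to be gathered separately, for example through surveys or in-app feedback.

```python
import math
import statistics
import time
from typing import Callable, Dict, List, Set

def percentile(values: List[float], pct: float) -> float:
    """Nearest-rank percentile (e.g. pct=95 for p95)."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]

def evaluate(retrieve: Callable[[str], List[str]],
             labeled: Dict[str, Set[str]], k: int = 5) -> Dict[str, float]:
    """Score a retriever against expert-labeled queries."""
    recalls: List[float] = []
    reciprocal_ranks: List[float] = []
    latencies_ms: List[float] = []
    for query, relevant in labeled.items():
        start = time.perf_counter()
        ranked = retrieve(query)
        latencies_ms.append((time.perf_counter() - start) * 1000)

        # Recall@k: fraction of the relevant documents found in the top k.
        recalls.append(len(relevant & set(ranked[:k])) / len(relevant))

        # Reciprocal rank: 1 / position of the first relevant hit, 0 if none.
        rr = next((1.0 / pos for pos, doc_id in enumerate(ranked, start=1)
                   if doc_id in relevant), 0.0)
        reciprocal_ranks.append(rr)

    return {
        "recall_at_k": statistics.mean(recalls),
        "mrr": statistics.mean(reciprocal_ranks),
        "p95_latency_ms": percentile(latencies_ms, 95),
    }

# Hypothetical labeled pilot set and a canned retriever for illustration.
canned = {"q1": ["d1", "d9", "d2"], "q2": ["d7", "d3"]}
labeled = {"q1": {"d1", "d2"}, "q2": {"d3"}}
report = evaluate(lambda q: canned[q], labeled, k=2)
print(report["recall_at_k"], report["mrr"])  # 0.75 0.75
```

Re-running the same labeled set after each model or index update turns "measure before scaling up" into a repeatable regression check that fits naturally into a CI/CD pipeline.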

LLMs to Start With

Healthcare

FinTech

Why Engage a Seasoned Solutions Architect and Cloud Engineer?

With 17 years of experience designing, implementing, and optimizing cloud infrastructures for AI/ML, I understand the intricacies of building scalable RAG solutions and specialize in creating secure, future-proof architectures. Whether you’re aiming to enhance diagnostics in Healthcare or automate complex workflows in FinTech, a robust cloud architecture underpins success. This includes selecting the right data storage solutions, designing microservices for retrieval and generation, and implementing strict security measures to protect sensitive information.

Pro Tip: Don’t overlook the importance of domain-specific fine-tuning! For best results, LLMs and retrieval indices should be tailored to the language, regulations, and data formats unique to your industry.

Embrace RAG for a Smarter Future

Retrieval-Augmented Generation is more than just a buzzword; it’s a transformative methodology that integrates AI and ML with the latest LLM capabilities to deliver accurate, context-aware responses. By extending these capabilities with agentic network creation, businesses in Healthcare and FinTech can unlock unprecedented levels of efficiency, personalization, and intelligence.

The key to success lies in carefully orchestrating data ingestion, indexing, retrieval, and generation. With a cloud-native approach and a focus on security and scalability, RAG can become the cornerstone of your organization’s AI strategy. As a solutions architect and cloud engineer with nearly two decades of experience, I am passionate about helping organizations chart a course through this evolving AI landscape.

Ready to take your AI initiatives to the next level?

Let’s discuss how a custom RAG implementation—augmented by agentic network creation—can revolutionize your workflows.

Feel free to reach out for a consultation or further details, and let’s build the future of Healthcare and FinTech together.